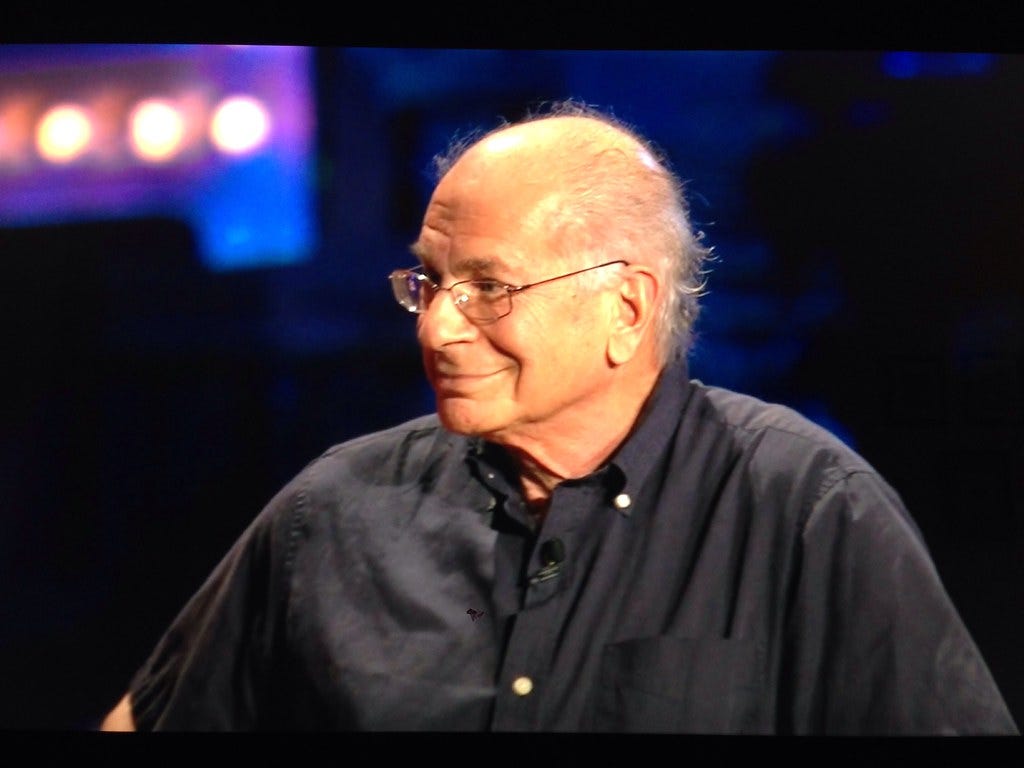

"8:36pm Watching the awesome Daniel Kahneman talk about the tyranny of the remembering self" by Buster Benson is licensed under CC BY-SA 2.0

In 2002, an Israeli-American thinker called Daniel Kahneman was awarded the Nobel Memorial Prize in Economic Sciences. This was an extraordinary achievement since he isn’t an economist. Instead, he is a psychologist who has studied the extent to which humans have an innate grasp of statistics and probabilities. His work, which is part of a field called behavioural economics, has questioned the assumption behind modern economics that we are fully rational. He popularized the research on how we really take decisions and form judgements in a bestselling book called Thinking, Fast and Slow, which was first published in 2011. His findings are very important to anyone interested in improving their understanding of the world.

There is a metaphor at the core of Kahneman’s book. He says our brains act in two very different ways, which he calls System 1 and System 2. Most of the time we use System 1. It is fast and instinctive and helps us take snap decisions. It works very well when we are faced with an emergency, but it is riddled with cognitive biases. One of these is confirmation bias - we tend to favour information that backs up our existing views. This means that System 1 makes us very bad natural statisticians. On the other hand, getting System 2 to fire up is hard work - it is associated with feelings of concentration and effort. Try to remember how you felt the last time you had to fill in a complex form (System 2) and compare that to a time you had to react suddenly to potential danger (System 1).

My free book on critical-thinking skills, Sharpen Your Axe, is based on a Bayesian approach. This involves taking a first guess, holding it lightly and then trying to adjust it based on the evidence. The research of Kahneman and his peers shows us why our first guess is likely to be wrong if we are dealing with a complex issue. At the same time, people with rigid and inflexible worldviews often make guesses about conspiracies to protect their opinions from contradictory evidence. Cognitive dissonance - the discomfort we feel when evidence contradicts a cherished idea - often leads people to double down instead of adjusting, which can take them in very strange directions.
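
For readers who like to see the arithmetic, here is a minimal sketch of what "holding a first guess lightly" can look like using Bayes' rule. The function name `bayes_update` and all of the numbers are hypothetical and chosen purely for illustration; they don't come from Kahneman's research or from the book.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return the updated probability of a hypothesis after one piece of evidence.

    prior: how strongly we believed the hypothesis beforehand (0 to 1)
    p_evidence_if_true: chance of seeing this evidence if the hypothesis is true
    p_evidence_if_false: chance of seeing this evidence if the hypothesis is false
    """
    numerator = prior * p_evidence_if_true
    denominator = numerator + (1 - prior) * p_evidence_if_false
    return numerator / denominator


# Hypothetical example: we start out 80% confident in a first guess.
belief = 0.8

# We then see three pieces of evidence, each twice as likely
# if the guess is wrong (0.6) as if it is right (0.3).
for _ in range(3):
    belief = bayes_update(belief, 0.3, 0.6)
    print(round(belief, 3))
```

With these made-up numbers, confidence falls from 0.8 to roughly 0.67, then 0.5, then 0.33. Someone who doubles down would, in effect, refuse to run the update at all.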

The idea that our first guesses are likely to be wrong is a hard lesson. It helps explain why education tends to insulate people against conspiracy theories, even though it can never be a perfect cure for something that appears to be deeply wired in our brains. I explore this issue in much greater depth in Chapter Five of Sharpen Your Axe. If you missed the earlier chapters, here are Chapter One, Chapter Two, Chapter Three and Chapter Four. I hope you find them interesting!

I decided to make this project free because I think it’s a shame that ideas like Bayesian statistics and cognitive biases aren’t better known among the general public. It would be greatly appreciated if you could share this post on social media or send it to friends who might need the information. Also, if you haven’t signed up for my mailing list yet, please do so. The next post will be on Saturday 16th January and will look at why utter certainty is so attractive. See you then!

Update (25 April 2021)

The full beta version is available here.

[Updated on 10 March 2022] Opinions expressed on Substack and Twitter are those of Rupert Cocke as an individual and do not reflect the opinions or views of the organization where he works or its subsidiaries.